#MS Research. Animal studies trend to go more trial-like.

I was asked this week what sources of information do you trust. I said definitely not the media.....and the best judgement is to learn the science and read the papers yourself. However, this is not easy especially if you learn bad practises.

I was asked this week what sources of information do you trust. I said definitely not the media.....and the best judgement is to learn the science and read the papers yourself. However, this is not easy especially if you learn bad practises.

However, in clinical studies, which are often small in nature, there has been a tendency for people to say that when P<0.1 there is a trend rather than say there was no statistically significant difference. So being positive when it really is negative.

OK you can reject a null hypothesis when there is in fact a real biological difference or accept a null hypothesis that there is no difference between observations when there is a biological difference these are called Type I and Type II errors.

Now more human trial-like animal studies arrives in Nature Journals, leading us into more bad science:-).

So in a recent paper it states about statistical analysis

OK you can reject a null hypothesis when there is in fact a real biological difference or accept a null hypothesis that there is no difference between observations when there is a biological difference these are called Type I and Type II errors.

| Null hypothesis (H0) is | |||

|---|---|---|---|

| Valid/True | Invalid/False | ||

| Judgement of Null Hypothesis (H0) | Reject | Type I error False Positive | Correct inference True Positive |

| Accept (fail to reject) | Correct inference True Negative | Type II error False negative | |

So in a recent paper it states about statistical analysis

"Unless stated otherwise, non-paired Student’s t-tests were performed for statistical analysis. P values <0.05 are indicated by *P values <0.005 by **P values <0.1 were considered as a trend".

Maybe the referee is a clinician, who else would accept this?, and now failure becomes a trend:-(

Maybe the referee is a clinician, who else would accept this?, and now failure becomes a trend:-(

Maybe power the study to get the answer, but we know Nature Journals have not been good at this

Two years later: journals are not yet enforcing the ARRIVE guidelines on reporting standards for pre-clinical animal studies. Baker D, Lidster K, Sottomayor A, Amor S. PLoS Biol. 2014 Jan;12(1):e1001756. There is growing concern that poor experimental design and lack of transparent reporting contribute to the frequent failure of pre-clinical animal studies to translate into treatments for human disease. In 2010, the Animal Research: Reporting of In Vivo Experiments (ARRIVE) guidelines were introduced to help improve reporting standards. They were published in PLOS Biology and endorsed by funding agencies and publishers and their journals, including PLOS, Nature research journals, and other top-tier journals. Yet our analysis of papers published in PLOS and Nature journals indicates that there has been very little improvement in reporting standards since then. This suggests that authors, referees, and editors generally are ignoring guidelines, and the editorial endorsement is yet to be effectively implemented.

When analysed, less than 5% of animal studies do a power calculation. This is because animal studies that are published are invariably positive as there is a publication bias of positive results.

In that paper we asked whether the data was analysed incorrectly.

EAE scoring is an scale of limp tail, limp legs and a random score is given to these features this type of data is not continuous this is called non parametric data and you should use non parametric statistics to analyse. Other data like height are continuous such as there are measures between 1m and to 2m say such as 1.5m, 1.24m, 1.23m. EAE data on the whole should not be analysed with parametric (e.g. T tests) but should use non-parametric data. We looked in the literature and found that in many cases parametric statistics are wrongly used. This occurred in the top journal (These have a high impact factor)

Two years later: journals are not yet enforcing the ARRIVE guidelines on reporting standards for pre-clinical animal studies. Baker D, Lidster K, Sottomayor A, Amor S. PLoS Biol. 2014 Jan;12(1):e1001756. There is growing concern that poor experimental design and lack of transparent reporting contribute to the frequent failure of pre-clinical animal studies to translate into treatments for human disease. In 2010, the Animal Research: Reporting of In Vivo Experiments (ARRIVE) guidelines were introduced to help improve reporting standards. They were published in PLOS Biology and endorsed by funding agencies and publishers and their journals, including PLOS, Nature research journals, and other top-tier journals. Yet our analysis of papers published in PLOS and Nature journals indicates that there has been very little improvement in reporting standards since then. This suggests that authors, referees, and editors generally are ignoring guidelines, and the editorial endorsement is yet to be effectively implemented.

When analysed, less than 5% of animal studies do a power calculation. This is because animal studies that are published are invariably positive as there is a publication bias of positive results.

In that paper we asked whether the data was analysed incorrectly.

EAE scoring is an scale of limp tail, limp legs and a random score is given to these features this type of data is not continuous this is called non parametric data and you should use non parametric statistics to analyse. Other data like height are continuous such as there are measures between 1m and to 2m say such as 1.5m, 1.24m, 1.23m. EAE data on the whole should not be analysed with parametric (e.g. T tests) but should use non-parametric data. We looked in the literature and found that in many cases parametric statistics are wrongly used. This occurred in the top journal (These have a high impact factor)

Overall it was about half the time in Journals, but in the Nature/ Science Journals it was wrong in about 95% of cases. So they are setting the bad example

Baker D, Lidster K, Sottomayor A, Amor S. Reproducibility: Research-reporting standards fall short. Nature. 2012 Dec 6;492(7427):41. doi: 10.1038/492041a

After we called them on this, they introduced reporting standards. Did they and their referees learn about the statistics?

So recently we got this figure in a Nature Journal and the work was splashed across the media..........The great new hope.

So we looked as the data

Figure legend. Clinical scores of two independent EAE experiments at d23 post disease induction. Individual scores as well as the mean score of two independent experiments are shown. Control: n=10, vehicle: n=13, xxxxx-345: n=11. Control versus vehicle: P=0.620, control versus xxxxx-345: P=0.017, vehicle versus xxxxx-345 P=0.029. * indicate P values <0.05 and ** indicate P values <0.005 based on a non-paired Student’s t test. Error bars are s.e.m.

There is a thought in clinical trial studies that you should supply the primary data so it can be re-analysed. Many companies now willingly do this. This probably will occur or science papers too.

So in the figure above they provide primary data. In the drug-treated animals the scores appear to be: 0, 0 ,0, 0.5, 0.5, 2, 2.5, 3, 3.5, 3.5, 3.5 n=11 in vehicle scores appear to be: 0.5, 2.5, 2.5, 2.5, 2,75, 2.75, 3, 3.5, 3.5, 3.5, 3.5, 3.5, 3.5 n=13.

Do a t test drug verses vehicle and you get p=0.029 (as in the legend to the figure above) so all is fine and drug is great. The media go mad.....an its the next best thing since sliced bread:-)

Baker D, Lidster K, Sottomayor A, Amor S. Reproducibility: Research-reporting standards fall short. Nature. 2012 Dec 6;492(7427):41. doi: 10.1038/492041a

After we called them on this, they introduced reporting standards. Did they and their referees learn about the statistics?

So recently we got this figure in a Nature Journal and the work was splashed across the media..........The great new hope.

So we looked as the data

Figure legend. Clinical scores of two independent EAE experiments at d23 post disease induction. Individual scores as well as the mean score of two independent experiments are shown. Control: n=10, vehicle: n=13, xxxxx-345: n=11. Control versus vehicle: P=0.620, control versus xxxxx-345: P=0.017, vehicle versus xxxxx-345 P=0.029. * indicate P values <0.05 and ** indicate P values <0.005 based on a non-paired Student’s t test. Error bars are s.e.m.

There is a thought in clinical trial studies that you should supply the primary data so it can be re-analysed. Many companies now willingly do this. This probably will occur or science papers too.

So in the figure above they provide primary data. In the drug-treated animals the scores appear to be: 0, 0 ,0, 0.5, 0.5, 2, 2.5, 3, 3.5, 3.5, 3.5 n=11 in vehicle scores appear to be: 0.5, 2.5, 2.5, 2.5, 2,75, 2.75, 3, 3.5, 3.5, 3.5, 3.5, 3.5, 3.5 n=13.

Do a t test drug verses vehicle and you get p=0.029 (as in the legend to the figure above) so all is fine and drug is great. The media go mad.....an its the next best thing since sliced bread:-)

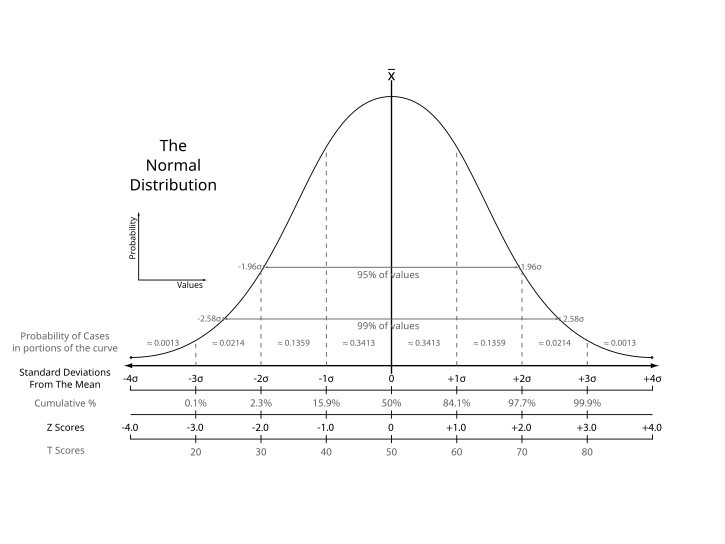

However, you should not do a t test on this type of data. The assumptions of a t test as that the data is (a) normally distributed. You test this and it passes the test p=0.152,

However, it also assumes that (b) data groups have equal variances (the square of standard deviation). Test for that and it fails P<0.05. So it is not valid to do a t test on this data but importantly it is not valid to do a t test on this data, because the data is not parametric, it is non-parametric.

So do a non-parametric test on the data like the Mann Whitney U test . This has less power to detect differences than a t test.

Do this on the data above and P=0.082.........Ooooops.

So now do drug verses untreated as well and this also fails P=0.121, so not even a trend:-)

However, it also assumes that (b) data groups have equal variances (the square of standard deviation). Test for that and it fails P<0.05. So it is not valid to do a t test on this data but importantly it is not valid to do a t test on this data, because the data is not parametric, it is non-parametric.

So do a non-parametric test on the data like the Mann Whitney U test . This has less power to detect differences than a t test.

Do this on the data above and P=0.082.........Ooooops.

So now do drug verses untreated as well and this also fails P=0.121, so not even a trend:-)

There is no statistically significant effect. The drug has not worked! So you accept this or do more studies to show if this so called trend is real or not, harder to do in humans, much easier to do in animal studies.

Simple school-boy stuff. All the reviewers need to do is read:

Baker D, Amor S.Publication guidelines for refereeing and reporting on animal use in experimental autoimmune encephalomyelitis. J Neuroimmunol. 2012 ;242(1-2):78-83.

Hopefully, this will get the altimetrics up on the old papers:-)

I suspect many of you won't be bothered by this post,but if you were pinning your hopes on drug xxxxx-345 then these hopes are being dashed. This type of detective work can not normally be done because we do not get to see the primary data.

This is not a rare example..but a new one to use in my teaching on experimental design 1O1.

Hopefully, this will get the altimetrics up on the old papers:-)

I suspect many of you won't be bothered by this post,but if you were pinning your hopes on drug xxxxx-345 then these hopes are being dashed. This type of detective work can not normally be done because we do not get to see the primary data.

This is not a rare example..but a new one to use in my teaching on experimental design 1O1.